R1HIM

Developer

Projects

My name is Oleg Rakhimov (R1HIM). Here you will find my projects, media (videos, screenshots, PDFs), and ways to contact me.

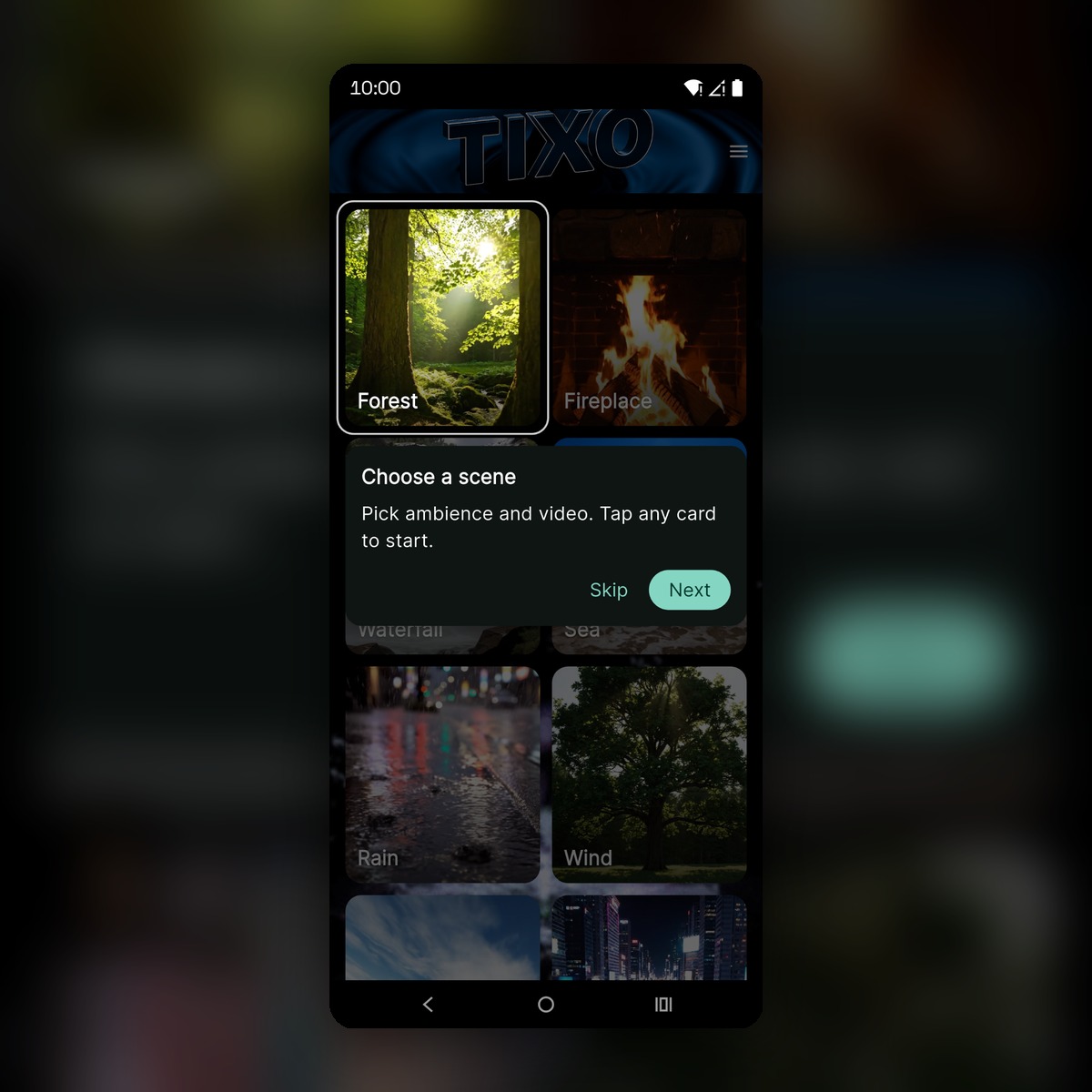

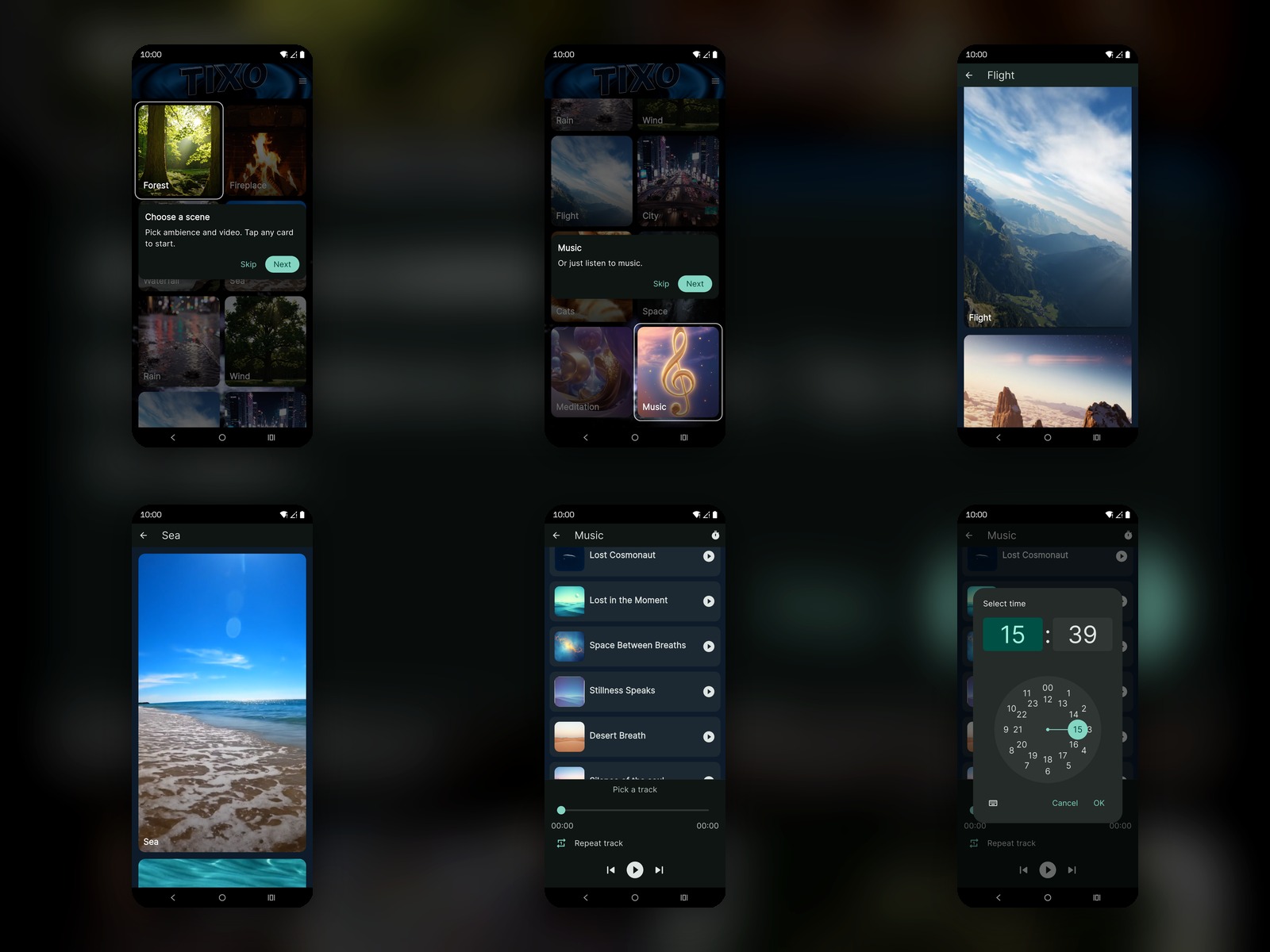

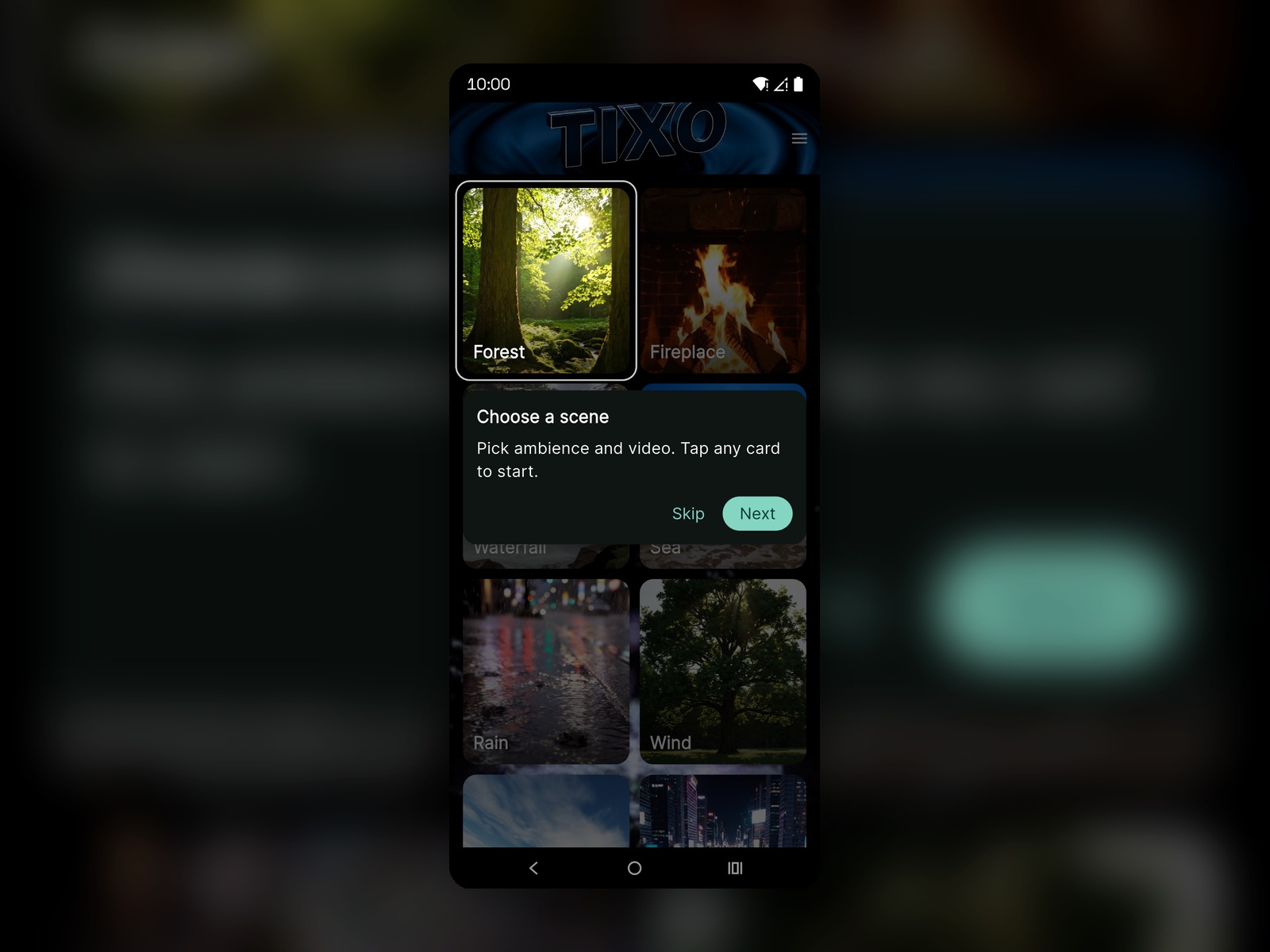

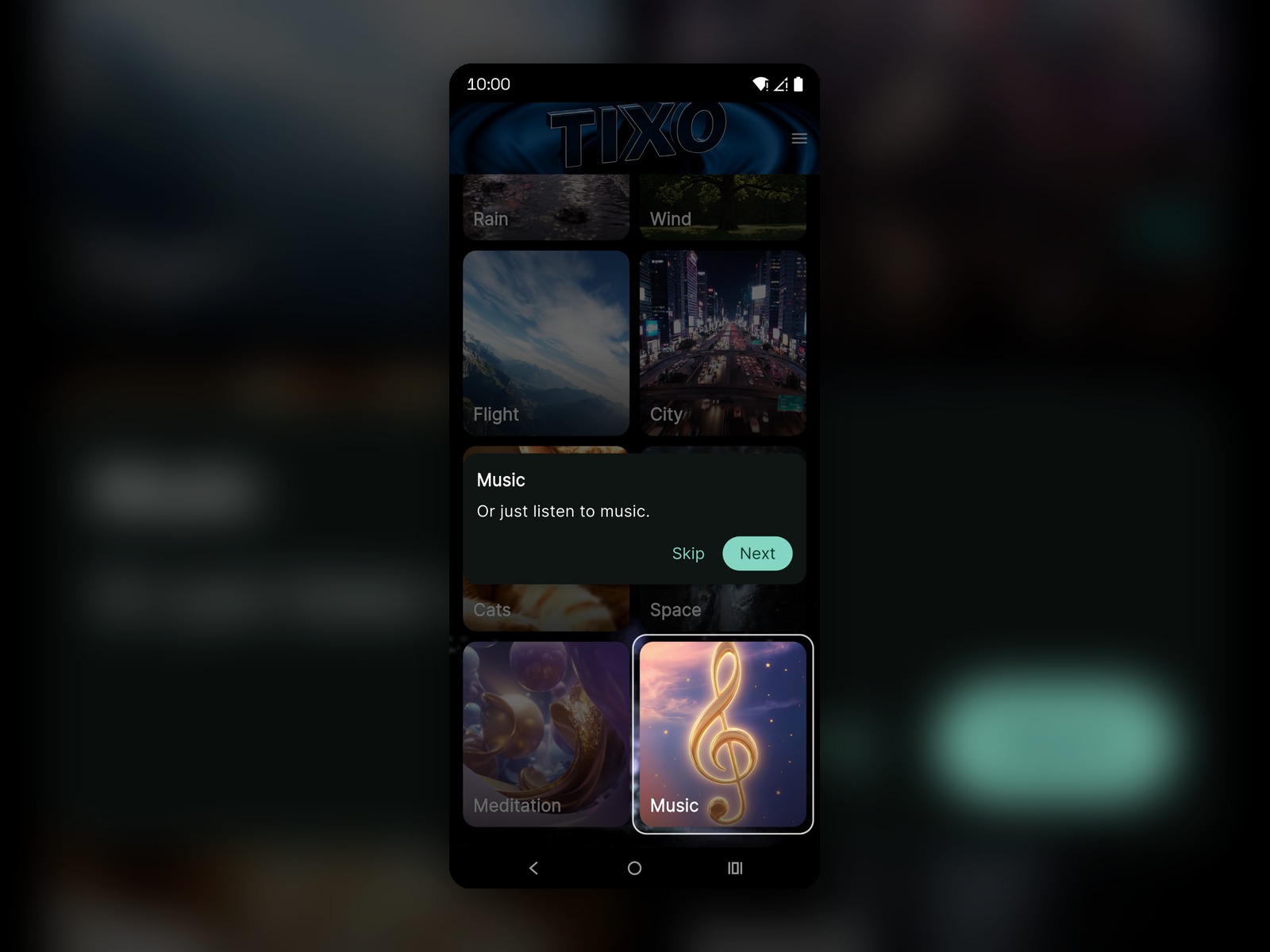

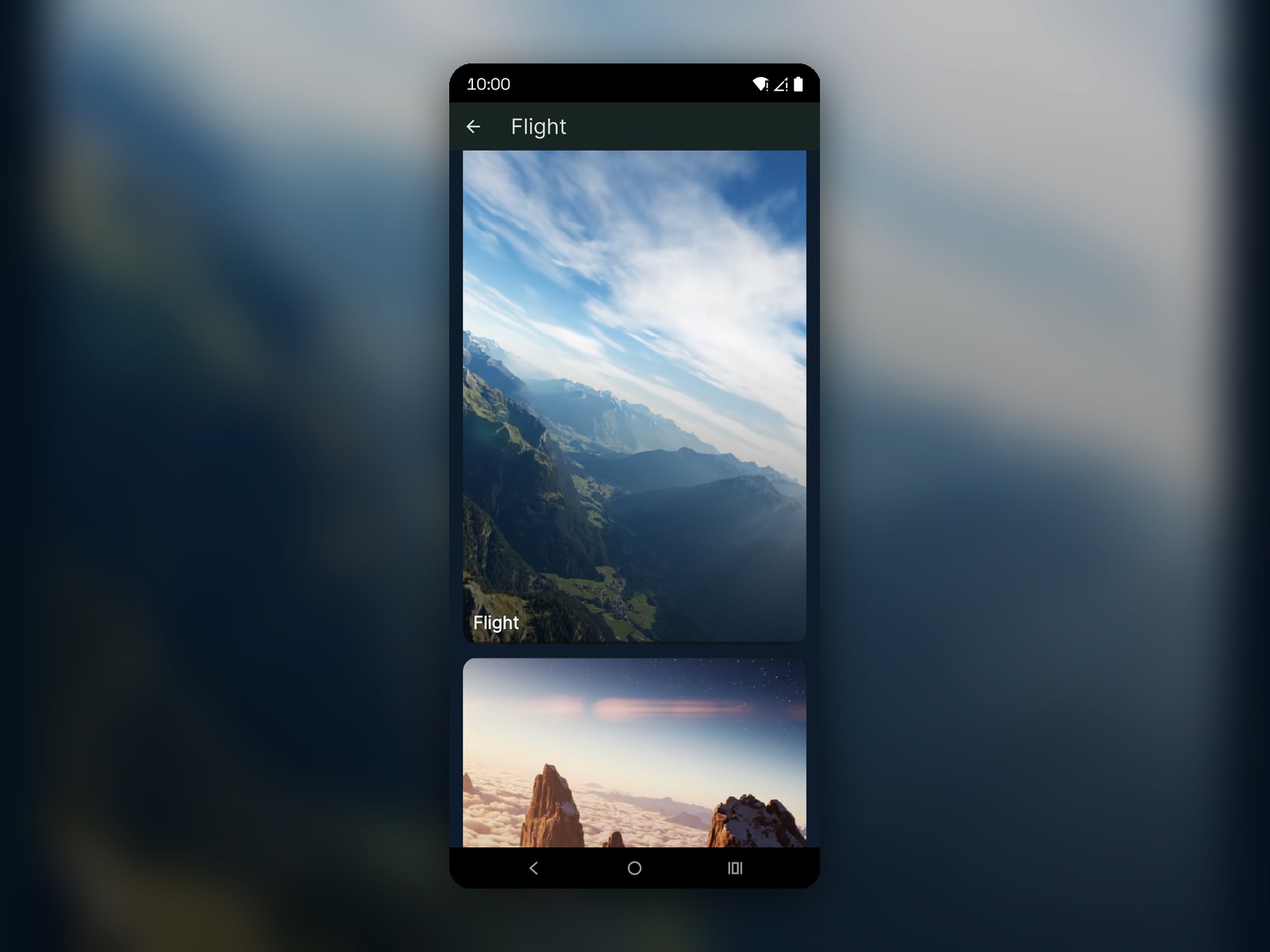

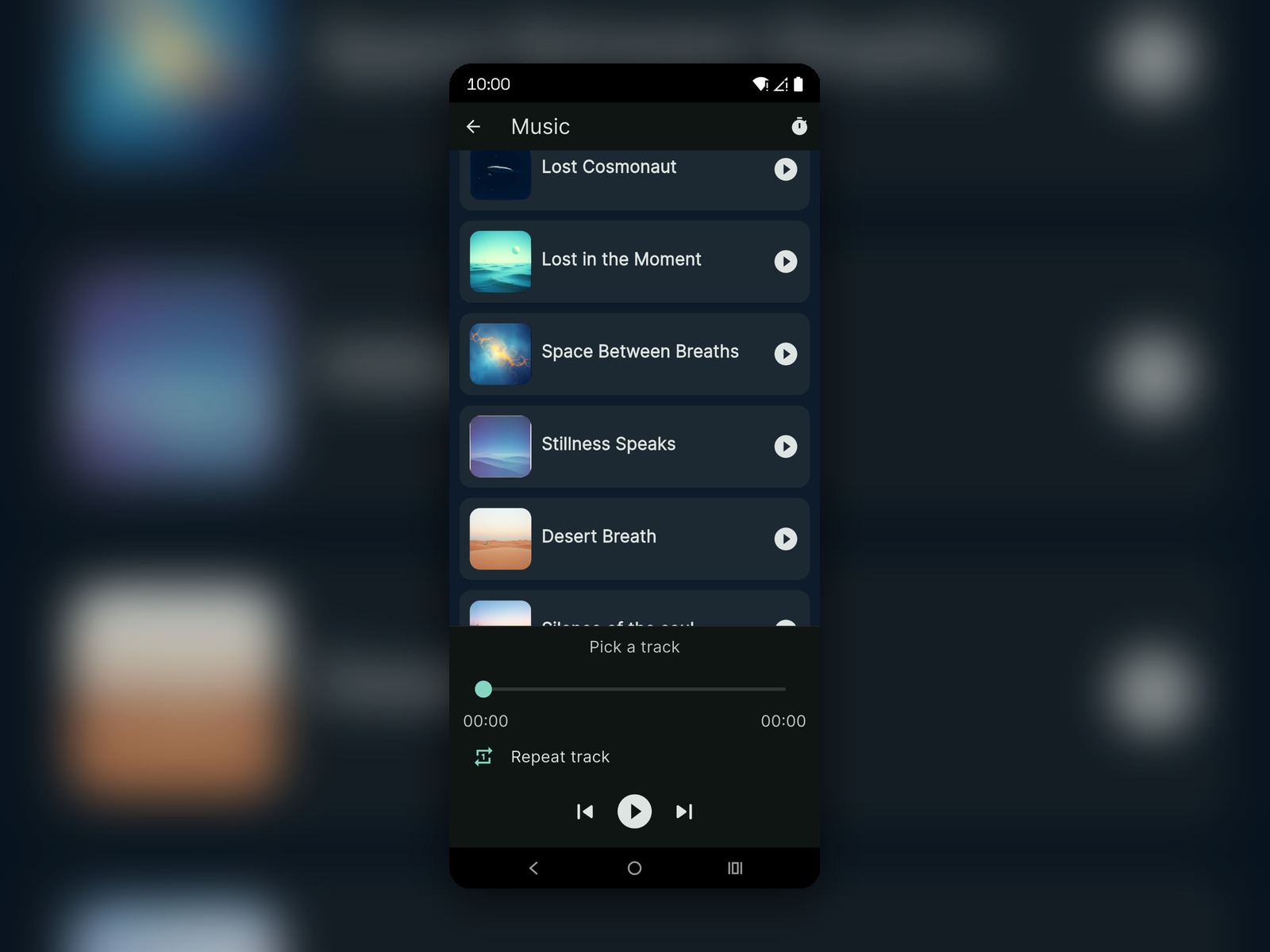

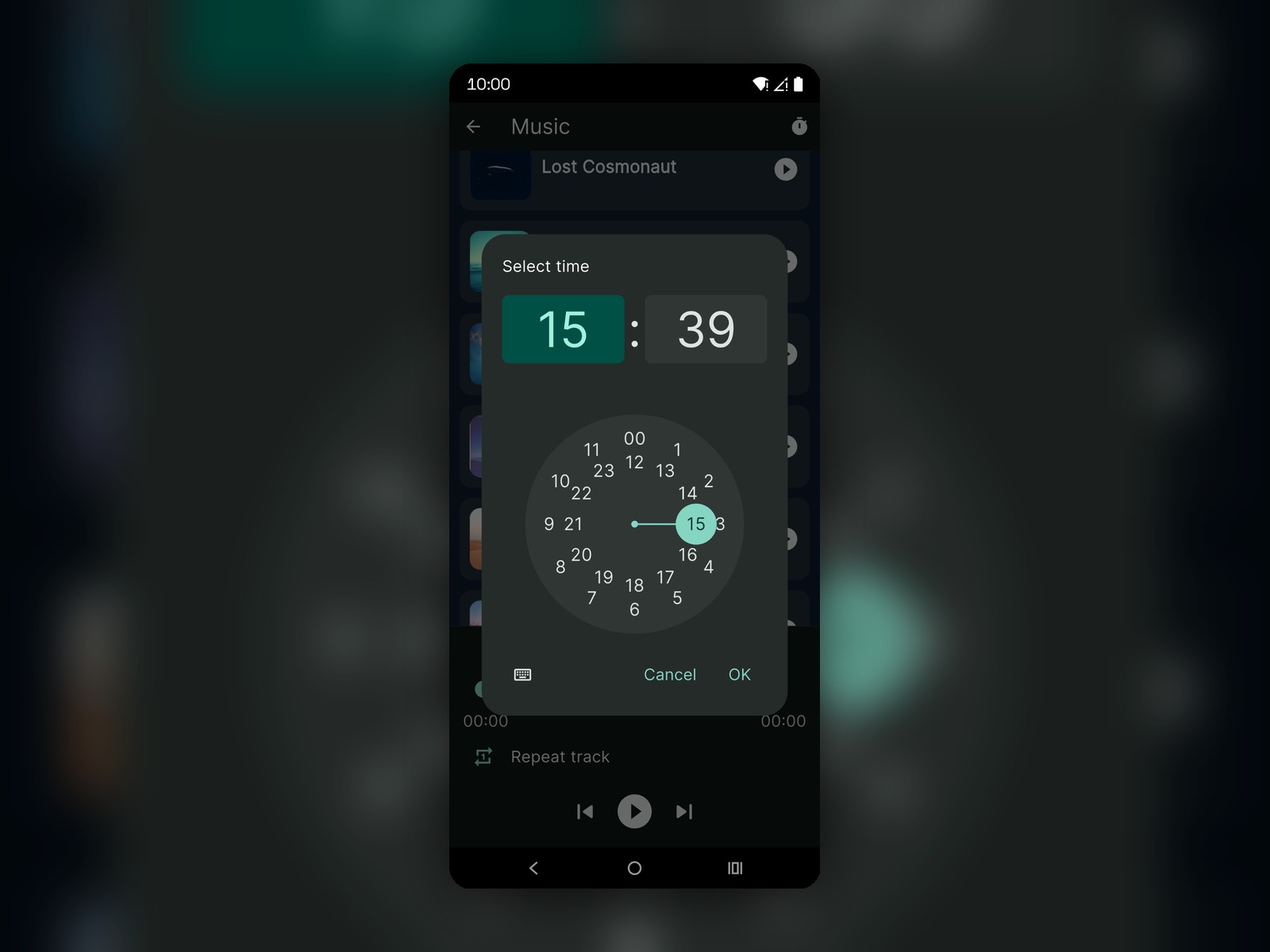

TIXO: Ambient Scenes & Music Player

Relaxation and ambience app with selectable scenes (video backgrounds), a built-in music player, and a session timer.

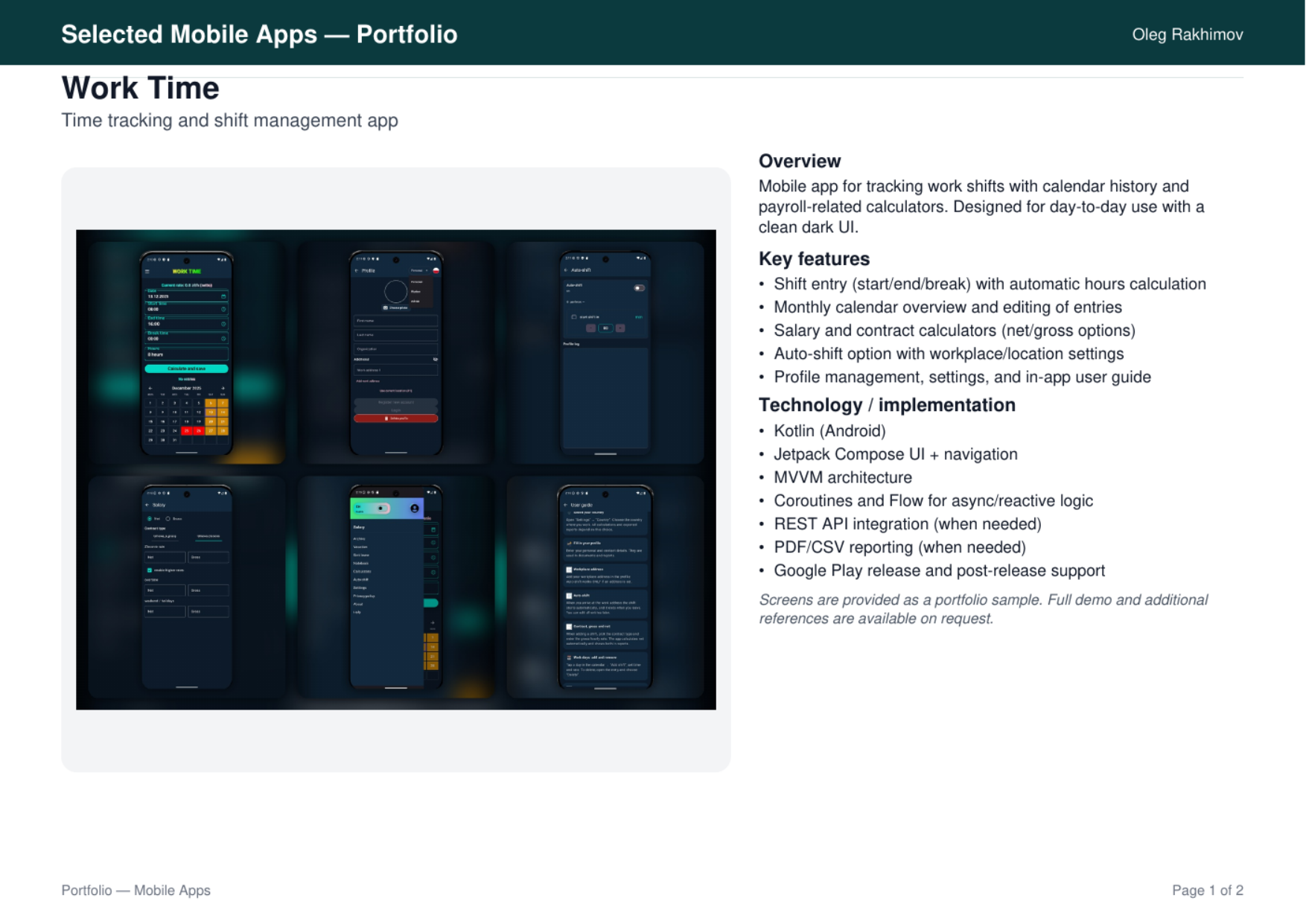

Work Time: Shift Tracking and Calculations

Shift management app with a calendar, history, hours calculation, and pay-related calculators.

- Shift entry (start/end/break) with automatic hours calculation

- Monthly calendar and editing of entries

- Calculators (net/gross), settings, profile, and in-app manual
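The automatic hours calculation can be illustrated with a small sketch. It assumes that times are entered as "HH:MM" strings, that the break is given in minutes, and that an end time earlier than the start means the shift ran past midnight. None of these details are confirmed by the app description, and `toMinutes` and `shiftHours` are hypothetical names.

```javascript
// Hypothetical helper: parse "HH:MM" into minutes since midnight.
function toMinutes(hhmm) {
  const [h, m] = hhmm.split(":").map(Number);
  return h * 60 + m;
}

// Worked hours = (end - start) - break.
// Assumption: an end time earlier than the start means the shift crossed midnight.
function shiftHours(start, end, breakMinutes = 0) {
  let span = toMinutes(end) - toMinutes(start);
  if (span < 0) span += 24 * 60;
  const worked = Math.max(0, span - breakMinutes);
  return worked / 60;
}

console.log(shiftHours("08:00", "16:30", 30)); // 8
console.log(shiftHours("22:00", "06:00", 45)); // 7.25
```

Working in whole minutes and dividing only at the end keeps the arithmetic exact until the final conversion to hours.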

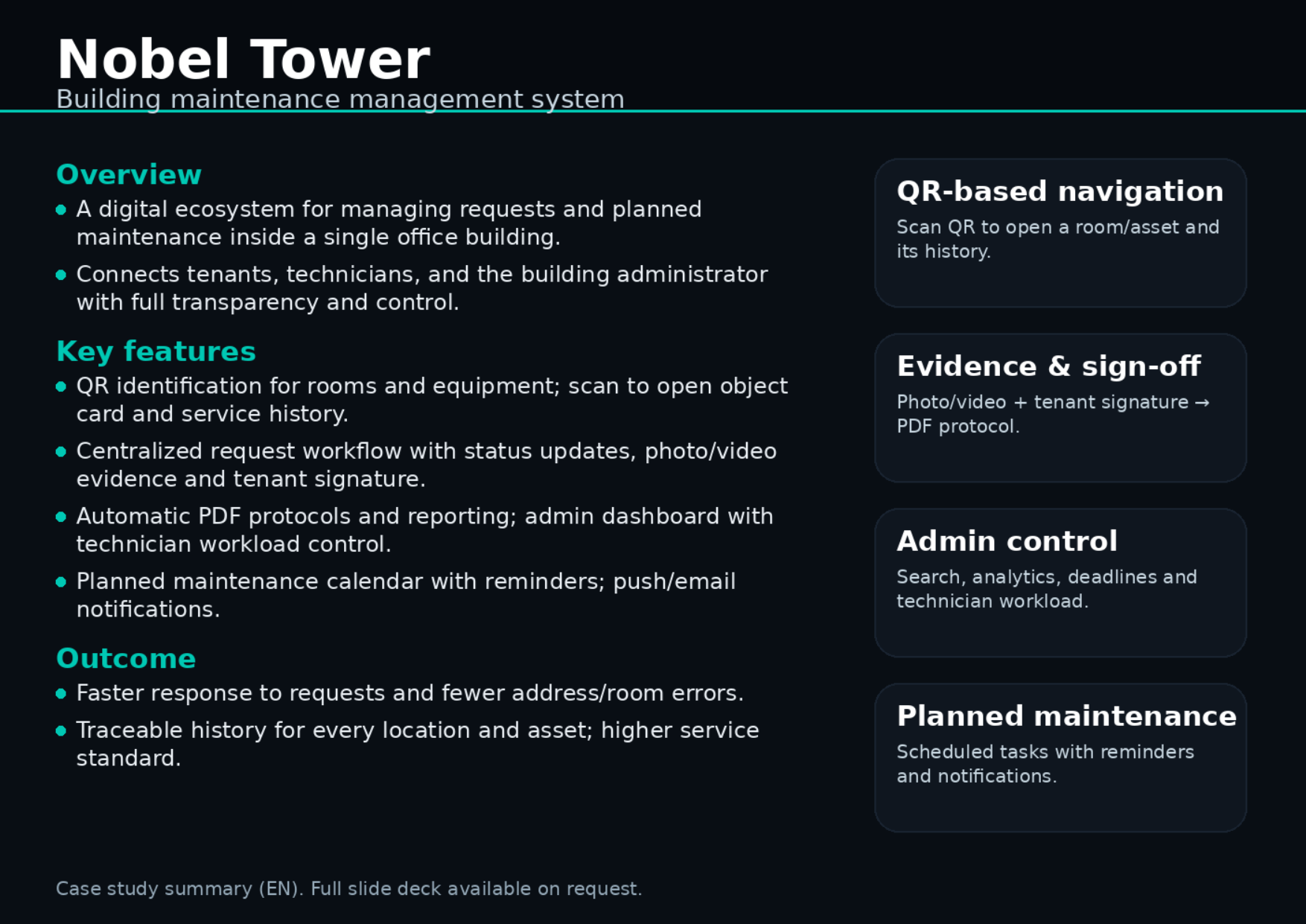

Nobel Tower: Building Maintenance Management System

Ecosystem for tickets and scheduled maintenance in an office building, with transparent workflows for tenants, technicians, and admins.

- QR identification of rooms and equipment, including per-object history

- Tickets with status, photo/video evidence, and tenant confirmation

- PDF protocols/reporting and admin panel

- Scheduled maintenance: calendar, reminders, push/e-mail

local-meet-translator

Self-hosted prototype for near real-time translation of web calls (Google Meet / Zoom Web / Teams Web).

The OpenAI key is not stored in the extension: all requests go through a localhost bridge service protected by a token.

Details

Key idea

- The OpenAI API key is stored only locally (.env on the PC).

- The browser extension uses only a local token (X-Auth-Token), not the OpenAI key.
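On the extension side, this split could look roughly like the sketch below. Only the header name, the endpoint path, and port 8799 come from this page. The function name `buildBridgeRequest` and the payload fields `text` and `targetLang` are assumptions made for illustration.

```javascript
// Port as listed in the configuration on this page.
const BRIDGE_URL = "http://127.0.0.1:8799";

// Build the arguments for a fetch() call to the local bridge.
// Only the local token is attached; the OpenAI key never appears in extension code.
function buildBridgeRequest(path, localToken, payload) {
  return {
    url: BRIDGE_URL + path,
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        "X-Auth-Token": localToken,
      },
      body: JSON.stringify(payload),
    },
  };
}

// Payload field names are hypothetical.
const req = buildBridgeRequest("/translate-text", "my-local-token", {
  text: "Guten Morgen",
  targetLang: "en",
});
console.log(req.url, req.options.headers["X-Auth-Token"]);
// In the extension this would be sent with: await fetch(req.url, req.options)
```

If the extension's storage leaks, only the local token is exposed, and that token is useful solely against the bridge on the same machine.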

Tech stack

- Extension: JavaScript/HTML/CSS, Chrome Extensions MV3 (tabCapture, getUserMedia, offscreen, content_script).

- Local Bridge: Java 21, HttpServer/HttpClient (JDK), Jackson, Maven (shade-plugin), PowerShell/Batch startup.

Incoming translation (subtitles)

- tabCapture → MediaRecorder chunks (OGG/Opus preferred).

- POST /transcribe-and-translate → OpenAI STT (whisper-1) → translation via /v1/responses (gpt-4o-mini, temperature=0).

- Overlay subtitles on top of the call page (content_script).
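The "OGG/Opus preferred" choice in the capture step can be sketched as a preference list with fallbacks. `MediaRecorder.isTypeSupported` is the real browser API for this check. The WebM fallback entries and the name `pickMimeType` are assumptions, and the support check is passed in as a function so the sketch also runs outside a browser.

```javascript
// Container/codec candidates in order of preference.
// Assumption: the entries after OGG/Opus are illustrative fallbacks.
const PREFERRED_TYPES = [
  "audio/ogg;codecs=opus",
  "audio/webm;codecs=opus",
  "audio/webm",
];

// isSupported is injected so the function can run anywhere; in the extension
// it would be (t) => MediaRecorder.isTypeSupported(t).
function pickMimeType(isSupported, candidates = PREFERRED_TYPES) {
  for (const type of candidates) {
    if (isSupported(type)) return type;
  }
  return ""; // an empty mimeType lets MediaRecorder pick its own default
}

// Simulate a browser that can only record WebM.
const webmOnly = (t) => t.startsWith("audio/webm");
console.log(pickMimeType(webmOnly)); // audio/webm;codecs=opus
```

The chosen type would then be passed as `new MediaRecorder(stream, { mimeType })`.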

Outgoing voice translation (TTS)

- Microphone via getUserMedia → chunks → transcribe/translate.

- POST /tts → OpenAI TTS (/v1/audio/speech, gpt-4o-mini-tts, mp3).

- Play back into a virtual audio cable (e.g., VB-Audio Virtual Cable) so Meet can use it as a microphone.

Safeguards

- VAD / silence threshold (RMS): silent chunks are not sent for ASR.

- Mute mic during TTS: mic chunks are ignored while TTS is playing.

- Text deduplication: similar ASR outputs within a short window are skipped.
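The three safeguards above can be combined into one small gate, sketched below. The threshold of 0.01, the 3-second window, the 0.8 similarity cut-off, and the word-overlap similarity measure are all assumptions chosen for illustration; the project may use different values and a different comparison. `ChunkGate` is a hypothetical name.

```javascript
// Root mean square of PCM samples in the range [-1, 1].
function rms(samples) {
  if (samples.length === 0) return 0;
  let sum = 0;
  for (const s of samples) sum += s * s;
  return Math.sqrt(sum / samples.length);
}

// Word-overlap (Jaccard) similarity; a stand-in for the project's own measure.
function similarity(a, b) {
  const wa = new Set(a.toLowerCase().split(/\s+/).filter(Boolean));
  const wb = new Set(b.toLowerCase().split(/\s+/).filter(Boolean));
  if (wa.size === 0 && wb.size === 0) return 1;
  let shared = 0;
  for (const w of wa) if (wb.has(w)) shared++;
  return shared / (wa.size + wb.size - shared);
}

class ChunkGate {
  constructor({ silenceRms = 0.01, dedupWindowMs = 3000, minSimilarity = 0.8 } = {}) {
    this.silenceRms = silenceRms;
    this.dedupWindowMs = dedupWindowMs;
    this.minSimilarity = minSimilarity;
    this.ttsPlaying = false; // set to true while TTS audio is playing
    this.recent = []; // accepted texts as { text, at }
  }

  // Should this mic chunk be sent for ASR?
  shouldTranscribe(samples) {
    if (this.ttsPlaying) return false; // ignore mic chunks during TTS
    return rms(samples) >= this.silenceRms; // skip silent chunks
  }

  // Should this ASR output be used, or is it a near-duplicate of a recent one?
  acceptText(text, now = Date.now()) {
    this.recent = this.recent.filter((r) => now - r.at <= this.dedupWindowMs);
    const dup = this.recent.some((r) => similarity(r.text, text) >= this.minSimilarity);
    if (!dup) this.recent.push({ text, at: now });
    return !dup;
  }
}

const gate = new ChunkGate();
const tone = Float32Array.from({ length: 480 }, (_, i) => 0.2 * Math.sin(i / 5));
console.log(gate.shouldTranscribe(new Float32Array(480))); // false (silence)
console.log(gate.shouldTranscribe(tone)); // true
console.log(gate.acceptText("hello everyone", 0)); // true
console.log(gate.acceptText("Hello everyone", 1000)); // false (duplicate within window)
console.log(gate.acceptText("hello everyone", 5000)); // true (window expired)
```

Checking the TTS flag before computing the RMS means the extension's own synthesized speech is never sent for transcription, which avoids a feedback loop.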

Local API (127.0.0.1)

- Requests must carry an X-Auth-Token header whose value equals LOCAL_MEET_TRANSLATOR_TOKEN.

- GET /health, POST /translate-text, POST /transcribe-and-translate, POST /tts (only when ENABLE_TTS=true).
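The token check itself can be illustrated as follows. The bridge is written in Java, so this JavaScript sketch only shows the logic, not the project's code. The 401 status for a failed check, the rejection of an empty configured token, and the names `tokensMatch` and `authorize` are assumptions.

```javascript
// Compare two strings without exiting on the first mismatch, so response
// timing does not reveal how many leading characters were correct.
function tokensMatch(provided, expected) {
  if (typeof provided !== "string" || typeof expected !== "string") return false;
  if (expected.length === 0) return false; // assumption: an unset token never matches
  let diff = provided.length ^ expected.length;
  for (let i = 0; i < expected.length; i++) {
    // charCodeAt returns NaN past the end of the string; treat that as 0.
    diff |= (provided.charCodeAt(i) || 0) ^ expected.charCodeAt(i);
  }
  return diff === 0;
}

// Return the HTTP status for a request. Header names are matched
// case-insensitively, as HTTP requires.
function authorize(headers, expectedToken) {
  const entry = Object.entries(headers).find(
    ([name]) => name.toLowerCase() === "x-auth-token"
  );
  const provided = entry ? entry[1] : undefined;
  return tokensMatch(provided, expectedToken) ? 200 : 401;
}

console.log(authorize({ "X-Auth-Token": "s3cret" }, "s3cret")); // 200
console.log(authorize({ "X-Auth-Token": "wrong" }, "s3cret")); // 401
console.log(authorize({}, "s3cret")); // 401
```

In Java, a comparable constant-time comparison is available through `MessageDigest.isEqual` on the token bytes.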

Configuration (env)

- OPENAI_API_KEY, LOCAL_MEET_TRANSLATOR_PORT (default: 8799), LOCAL_MEET_TRANSLATOR_TOKEN.

- OPENAI_TRANSCRIBE_MODEL (default: whisper-1), OPENAI_TEXT_MODEL (default: gpt-4o-mini).

- ENABLE_TTS, OPENAI_TTS_MODEL (default: gpt-4o-mini-tts).